Post Archive

Static Libraries in a Dynamic World

Creating more honest versions of wrapped APIs.

There is a common pattern when using bindings for C libraries that work against the dynamic language I'm working in: the conceptual packages in the library (which are exposed as header includes, and so provide no extra overhead to use in your code) are actually packages in the binding.

Unless they are flying in the face of good practices, the dynamic language user actually has to work harder to identify a specific function/variable than the static language user would!

The Problem

Let's look at an example Qt program written in C++:

1 2 3 4 5 6 7 8 9 | #include <QApplication> #include <QPushButton> int main(int argc, char *argv[]) { QApplication app(argc, argv); QPushButton hello("Hello world!"); hello.show(); return app.exec(); } |

If we translate directly to Python, we are left with code that feels not only too specific, but puts more of a burden on the programmer than they would have with the static version of the library since they need to know exactly where every object comes from:

1 2 3 4 5 6 7 | import sys from PyQt4 import QtGui app = QtGui.QApplication(sys.argv) hello = QtGui.QPushButton("Hello world!") hello.show() exit(app.exec_()) |

This is something I encounter all the time. For example, in PyQt/PySide, the Adobe Photoshop Lightroom SDK, PyOpenGL, Autodesk's Maya "OpenMaya", PyObjC use of Apple's APIs, etc..

Repairing Python Virtual Environments

When upgrading Python breaks our environment, we can rebuild.

Python virtual environments are a fantastic method of insulating your projects from each other, allowing each project to have different versions of their requirements.

They work (at a very high level) by making a lightweight copy of the system Python, which symlinks back to the real thing whenever necessary. You can then install whatever you want in lib/pythonX.Y/site-packages (e.g. via pip), and you are good to go.

Depending on what provides your source Python, however, upgrading it can break things. For example, I use Homebrew, which (under the hood) stores everything it builds in versioned directories:

$ readlink $(which python) ../Cellar/python/2.7.8_2/bin/python

Whenever there even a minor change to this Python, symlinks back to that versioned directory may not work anymore, which breaks my virtual environments:

$ python dyld: Library not loaded: @executable_path/../.Python Referenced from: /Users/mikeboers/Documents/Flask-Images/venv/bin/python Reason: image not found Trace/BPT trap: 5

There is an easy fix: manually remove the links back to the old Python, and rebuild the virtual environment.

Parsing Python with Python

(Ab)using the tokenize module.

A few years ago I started writing PyHAML, a Pythonic version of HAML for Ruby.

Since most of the HAML syntax is pretty straight forward, PyHAML's parser uses a series of regular expressions to get the job done. This proved generally inadequate anytime that there was Python source to be isolated, since Python isn't quite so straight forward to parse.

The earliest thing to bite me was nested parenthesis in tag definitions. In PyHAML you can specify a link via %a(href="http://example.com"), essentially treating the %a tag as a function which accepts keyword arguments. The very next thing you will want to do is include a function call, e.g. %a(href=url_for('my_endpoint')).

At this point, you are going to have A Bad Time™ with regular expressions as you can't deal with arbitrarily deep nesting. I "solved" this particular problem by scanning character by character until we have balanced the parenthesis, with something similar to:

1 2 3 4 5 6 7 | def split_balanced_parens(line): depth = 0 for pos, char in enumerate(line): depth += {'(': 1, ')': -1}.get(char, 0) if not depth: return line[:pos+1], line[pos+1:] return '', line |

And things were great with PyHAML for a long time, until a number of odd restrictions starting getting in the way. For example, you can't have a closing parenthesis in a string in a tag (like %img(title="A sad face looks like ):")), you can't have a colon in a control statement, and statements can't span lines via unbalanced brackets.

If only you could use Python to tokenize Python without fully parsing it...

Which Python?

Finding packages, modules, and entry points.

which is a handy utility for discovering the location of executables in your shell; it prints the full path to the first executable on your $PATH with the given name:

$ which python /usr/local/bin/python

In a similar stream, I often want to know the location of a Python package or module that I am importing, or what entry points are available in the current environment.

Lets write a few tools which do just that.

Where Does the `sys.path` Start?

Constructing Python's `import` path

Importing modules in Python seems simple enough on the surface: import mymodule looks across the sys.path until it finds your module. But where does the sys.path itself come from?

Sure, there is a $PYTHONPATH variable which "augments the default search path for module files", but what is the default search path, how is it "augmented", how does easy_install or pip fit into this, and where does my package manager install modules?

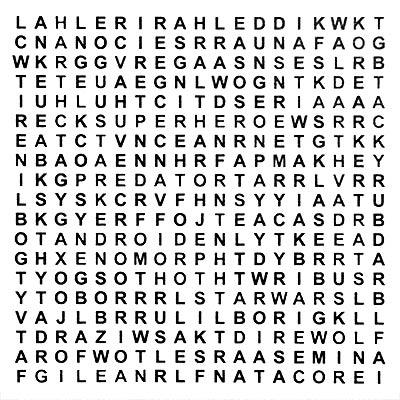

Dictionary Building for Word-Search

Mining Wikipedia for a "geek" lexicon.

One of the local pubs styles itself after geek/nerd culture (e.g. sci-fi, fantasy, and board games). The back of the coasters feature a word-search. It reports to contain 45 "geek words and phrases":

Writing code to solve a word-search isn't particularly tricky (if you remember to use a prefix trie) as long as you have a list of words to find, but in this case we are given no such clues.

But, since we are tremendously lazy, how can we solve this with code anyways?

Autocompleting Python Modules

Simplifying the search for modules to execute from a shell.

The last few times I overhauled an execution environment I required people to execute the bulk of their tools via python -m package.module instead of python package/module.py (to enable the development environment).

The downside is that you lose shell autocompletion, which can be a big deal if you have dozens of tools that you only occasionally use.

This addition to your ~/.bashrc fixes that.

@classproperty

Is this an amazing, or terrible idea?

Lets define a classproperty in Python such that it works as a property on both a class, and an instance:

class classproperty(object): def __init__(self, func): self.func = func def __get__(self, obj, cls): return self.func(cls, obj)

It can be used thusly:

class Example(object): @classproperty def prop(cls, obj): return obj or cls x = Example() assert x.prop is x assert Example.prop is Example

Is this a good idea, or a bad idea?

(Hint: I don't know.)

RenderMan Textures from Python

In order to better understand the guts of Python and RenderMan, in the past I have implemented a number of proof of concept projects extending or embedding each. Previously, I combined my efforts by embedding Python into RenderMan as an RSL shadeop so that shaders could be written in Python!

Unfortunately, that code is lost to the ages, so I decided to revisit my efforts and produce something that could actually have applications: using Python as a source of texture data for RenderMan.

Anatomy of a Maya Binary Cache

DAGs all the way down.

On a limited number of occasions I have had need to reach directly into some of the raw files produced by Autodesk's Maya. There isn't much documentation I could find on the web, so I will try to lay out what I have learned here.

The generic structure is based on the IFF format, but with enough small changes to warrant this exploration (with lots of kudos to cgkit's implementation, which helped with some of the gritty details).

View posts before March 13, 2013