Post Archive

Ultimate physical limits to computation

Lloyd, Seth. 2000. Ultimate physical limits to computation. Nature 406:1047–1054.

I just re-read part of this classic CS paper (PDF), and the figure captions at the back stood out to me as being particularly hilarious:

Figure 1: The Ultimate Laptop

The ‘ultimate laptop’ is a computer with a mass of one kilogram and a volume of one liter, operating at the fundamental limits of speed and memory capacity fixed by physics. [...] Although its computational machinery is in fact in a highly specified physical state with zero entropy, while it performs a computation that uses all its resources of energy and memory space it appears to an outside observer to be in a thermal state at approx. \( 10^9 \) degrees Kelvin. The ultimate laptop looks like a small piece of the Big Bang.

Figure 2: Computing at the Black-Hole Limit

The rate at which the components of a computer can communicate is limited by the speed of light. In the ultimate laptop, each bit can flip approx. \( 10^{19} \) times per second, while the time to communicate from one side of the one liter computer to the other is on the order of \( 10^{-9} \) seconds: the ultimate laptop is highly parallel. The computation can be sped up and made more serial by compressing the computer. But no computer can be compressed to smaller than its Schwarzschild radius without becoming a black hole. A one-kilogram computer that has been compressed to the black hole limit of \( R_S = \frac{2Gm}{c^2} = 1.485 \times 10^{-27} \) meters can perform \( 5.4258 \times 10^{50} \) operations per second on its \( I = \frac{4\pi G m^2}{\ln(2)\,\hbar c} = 3.827 \times 10^{16} \) bits. At the black-hole limit, computation is fully serial: the time it takes to flip a bit and the time it takes a signal to communicate around the horizon of the hole are the same.
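The black-hole numbers in that caption are easy to reproduce. Here is a quick check, assuming current CODATA values for \( G \), \( c \) and \( \hbar \) (the paper predates them, so the last digit may differ) and the Margolus-Levitin bound of \( 2E/(\pi\hbar) \) operations per second with \( E = mc^2 \), which is the bound Lloyd uses. The function name is just for illustration.

```python
import math

# CODATA 2018 values in SI units (newer than the constants used in the paper).
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s


def black_hole_computer(m):
    """Return (Schwarzschild radius in m, ops per second, bits) for a mass m in kg."""
    r_s = 2 * G * m / C**2
    # Margolus-Levitin bound: at most 2E / (pi * hbar) operations per second, E = mc^2.
    ops_per_s = 2 * m * C**2 / (math.pi * HBAR)
    # Black-hole entropy expressed in bits: 4 pi G m^2 / (ln 2 * hbar * c).
    bits = 4 * math.pi * G * m**2 / (math.log(2) * HBAR * C)
    return r_s, ops_per_s, bits
```

For `black_hole_computer(1.0)` this gives roughly 1.485e-27 m, 5.43e50 operations per second and 3.83e16 bits, matching the caption.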

The Gooch Lighting Model

"A Non-Photorealistic Lighting Model For Automatic Technical Illustration"

I've recently been toying with the Gooch et al. (1998) non-photorealistic lighting model. Unfortunately, the nature of the project does not permit me to post any of the "real" results quite yet, but some of the tests have a nice look to them all on their own.

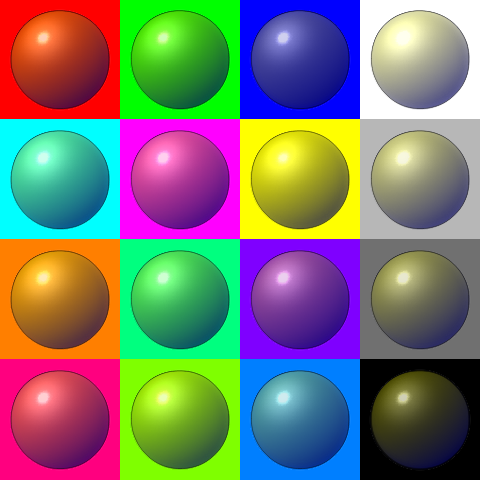
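The heart of the Gooch et al. model is a blend between a cool and a warm tone, driven by the angle between the surface normal and the light. Below is a minimal per-pixel sketch. It is not the implementation described in this post. It assumes 8-bit normal-map pixels, RGB colours in [0, 1], and the commonly cited form of the model, where \( k_{cool} = k_{blue} + \alpha k_d \), \( k_{warm} = k_{yellow} + \beta k_d \), and \( t = (1 + \hat{n}\cdot\hat{l})/2 \) blends from cool (facing away) to warm (facing the light). The defaults \( b = y = 0.4 \), \( \alpha = 0.2 \), \( \beta = 0.6 \) are the values usually quoted from the paper, so treat them as a starting point. The function names are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def decode_normal(rgb):
    """Map an 8-bit normal-map pixel from [0, 255] to a unit vector in [-1, 1]^3."""
    return normalize(tuple(x / 255.0 * 2.0 - 1.0 for x in rgb))


def gooch_shade(normal, albedo, light_dir, b=0.4, y=0.4, alpha=0.2, beta=0.6):
    """Cool-to-warm shading for one pixel (assumed parameter defaults, see above)."""
    n = normalize(normal)
    l = normalize(light_dir)
    k_blue = (0.0, 0.0, b)
    k_yellow = (y, y, 0.0)
    # Mix a fraction of the object's own colour into each tone.
    k_cool = tuple(kb + alpha * kd for kb, kd in zip(k_blue, albedo))
    k_warm = tuple(ky + beta * kd for ky, kd in zip(k_yellow, albedo))
    # t = 1 where the surface faces the light (warm), 0 where it faces away (cool).
    t = (1.0 + sum(ni * li for ni, li in zip(n, l))) / 2.0
    return tuple(t * w + (1.0 - t) * c for w, c in zip(k_warm, k_cool))
```

Applying `gooch_shade(decode_normal(normal_pixel), colour_pixel, light_dir)` over every pixel of a normal map and colour map gives the shaded image. Results can exceed 1.0 for bright colours, so clamp before writing them out.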

My implementation takes a normal map and colour map, e.g.:

This is the result from those inputs:

Cleaning Comments with Akismet

My site recently (finally) started to get hit by automated comment spam. There are a few ways that one can traditionally deal with this sort of thing:

- Manual auditing: Manually approve each and every comment that is made to the website. Given the low volume of comments I currently have, this wouldn't be too much of a hassle, but what fun would that be?

- Captchas: Force the user to prove they are human. ReCaptcha is the nicest in the field, but even it has been broken. And no captcha stops humans who are being paid (very little) to solve them.

- Honey pots: Add an extra field¹ to the form (e.g. last name, which I currently do not have) that is hidden by CSS. If it is filled out, one can assume a robot did it and mark the comment as spam. This still doesn't beat humans.

- Contextual filtering: Use Bayesian spam filtering to profile every comment as it comes in. By correcting misclassified comments, we slowly improve the quality of the filter. This is the only automated method that is able to catch humans.
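To make the contextual-filtering idea concrete, here is a toy two-class naive Bayes filter with add-one smoothing. It is only an illustration of the general technique: Akismet does not publish its internals, and the class and method names here are hypothetical. Note how "correcting" a misclassified comment is simply training on it again with the right label.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesFilter:
    """Two-class (spam/ham) multinomial naive Bayes with add-one smoothing."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = {"spam": 0, "ham": 0}

    def train(self, text, label):
        # Correcting a misclassification is just training with the right label.
        self.word_counts[label].update(tokenize(text))
        self.doc_counts[label] += 1

    def _log_score(self, tokens, label):
        total_docs = sum(self.doc_counts.values())
        # Smoothed log prior, so an untrained class never gives log(0).
        score = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_words = sum(self.word_counts[label].values())
        for tok in tokens:
            count = self.word_counts[label][tok]  # 0 for unseen words
            score += math.log((count + 1) / (total_words + len(vocab) + 1))
        return score

    def is_spam(self, text):
        tokens = tokenize(text)
        return self._log_score(tokens, "spam") > self._log_score(tokens, "ham")
```

After `train("cheap pills buy now", "spam")` and `train("great post about shaders", "ham")`, the filter flags "buy cheap pills" as spam and lets "nice post about shaders" through.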

I decided to go with the last option, as offered by Akismet, a service from the fine folks who also provide Gravatar (which I have talked about before). They have a free API (for personal use) that is really easy to integrate into whatever project you are working on.
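As a rough idea of what that integration looks like, here is a sketch that builds a `comment-check` request with only the standard library and interprets the reply. The endpoint and field names (`api_key`, `blog`, `user_ip`, `user_agent`, `comment_content`, and so on) and the plain-text `true`/`false` reply follow Akismet's public REST documentation as I understand it, so verify them against the current docs. The function names are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed endpoint, per Akismet's REST docs; check the current documentation.
COMMENT_CHECK_URL = "https://rest.akismet.com/1.1/comment-check"


def build_comment_check(api_key, blog_url, user_ip, user_agent, content,
                        author="", author_email=""):
    """Build (but do not send) a POST request asking Akismet to classify a comment."""
    params = {
        "api_key": api_key,
        "blog": blog_url,
        "user_ip": user_ip,
        "user_agent": user_agent,
        "comment_type": "comment",
        "comment_author": author,
        "comment_author_email": author_email,
        "comment_content": content,
    }
    data = urlencode(params).encode("utf-8")
    return Request(COMMENT_CHECK_URL, data=data, method="POST",
                   headers={"Content-Type": "application/x-www-form-urlencoded"})


def is_spam(response_body):
    """Assumed reply format: 'true' means spam, 'false' means ham."""
    body = response_body.strip()
    if body == "true":
        return True
    if body == "false":
        return False
    raise ValueError(f"unexpected Akismet response: {body!r}")
```

Sending it is then a matter of `urllib.request.urlopen(req)` and passing the decoded body to `is_spam`. Keeping request building separate from the network call makes the logic easy to test offline.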

Now it is time to try it out. I've been averaging about a dozen automated spam comments a day. With luck, none of them will show up here.

*crosses his fingers*

Update:

I was just in touch with Akismet support to offer them a suggestion regarding their documentation. Out of nowhere, they took a look at the API calls I was making to their service and pointed out how I could modify them to make my requests more effective at catching spam!

That is spectacular support!

¹ The previously linked article is dead as of Sept. 2014.

New Demo Reel

For the first time since 2008, I have a new demo reel. This one finally has a quick breakdown of Blind Spot, and a lot of awesome shots from The Borgias.

Fangoria writes about "Blind Spot"

More love for Blind Spot, as Matt Nayman gave an interview for Fangoria that was just posted!

He spoke about my work on the film:

The most difficult part of BLIND SPOT to complete was postproduction. The movie owes a lot of its power to my wonderful postproduction supervisor and co-producer, Mike Boers. He’s a fantastic visual effects artist, and was integral to bringing this film to life even during the scripting stage. We spent about five months working together on the CGI and compositing, using some beefy home computers and a lot of state-of-the-art software. Five minutes is a long time for any visual effects shot to hold up, and ours had to fill two-thirds of the screen for the entire movie. I am very proud of the effects we achieved for BLIND SPOT on such a minuscule budget.

Thanks, Matt!

Torontoist writes about "Blind Spot"

The Torontoist just posted a short article on the Toronto After Dark Film Festival, mentioning Blind Spot as "one of [their] favorites this year". They write:

[The] short speaks for itself: composed of a single shot, the film took a day to shoot at Pie in the Sky but post-production special effects took eight months to complete, between Nayman and his longtime collaborator, Mike Boers. Nayman hoped to keep the film ambiguous, as it grapples with a man so engrossed with everyday minutiae he doesn’t notice an apocalypse occurring outside his car window. “I’m hoping,” he says, “that some people read it as sci-fi and some people read it as darkly funny.” Sharp and aptly observed, audiences will read it as good filmmaking either way.

"Blind Spot" Festival Run

Blind Spot, a short film by Matt Nayman and me, has been accepted to a number of festivals to screen in the near future!

So far it will be screening on:

- October 22nd in Austin, TX at the Austin Film Festival

- October 25th in Toronto, ON at the Toronto After Dark Film Festival (which I will be attending)

- At some point from November 3 to November 20 in Leeds, UK at the Leeds International Film Festival

After the film's premiere, I will finally be able to publicly show the film (I will post it here) and make long-overdue updates to my VFX demo reel. [edit: no longer the case]

You can watch the film on its website!

RoboHash and Gravatar

I recently discovered a charming web service called RoboHash which returns an image of a robot deterministically as a function of some input text. Take a gander at a smattering of random robots:

These would make an awesome fallback as an avatar for those without a Gravatar set up, since it will always give you the same robot if you enter the same email address. So of course I implemented it for this site!
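Wiring the two together mostly comes down to Gravatar's `d` (default image) parameter, which accepts a URL-encoded fallback image URL. Here is a minimal sketch, not necessarily how this site does it. It assumes Gravatar's MD5-of-the-trimmed-lowercased-email scheme and RoboHash's `robohash.org/<text>.png` URL form. It also feeds RoboHash the email's hash rather than the address itself, so the address never appears in a URL. The function name is hypothetical.

```python
import hashlib
from urllib.parse import urlencode


def gravatar_with_robohash(email, size=80):
    """Gravatar URL that falls back to a RoboHash robot for unknown addresses."""
    # Gravatar identifies users by the MD5 of the trimmed, lowercased email.
    digest = hashlib.md5(email.strip().lower().encode("utf-8")).hexdigest()
    # Same email -> same digest -> same robot. No query string here, since
    # Gravatar is documented to reject default-image URLs that carry one.
    fallback = f"https://robohash.org/{digest}.png"
    query = urlencode({"s": size, "d": fallback})  # URL-encodes the fallback
    return f"https://www.gravatar.com/avatar/{digest}?{query}"
```

Because the email is normalized before hashing, "  Foo@Example.com " and "foo@example.com" get the same avatar, whether that is a real Gravatar or the fallback robot.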

Canon XF100 to Apple ProRes

As lossless as I can manage it.

I have finally figured out a way to process my raw Canon XF100 video files into Apple ProRes. I'm not satisfied with Final Cut Pro's log-and-transfer function, because it seems to require the footage to be transferred directly from the camera or card. I want to hold on to the original MXF files and be able to process them at my leisure.

The many hidden versions in Dropbox

Dropbox has a lovely feature in which they retain multiple versions of your files in case you want to revert some recent changes. When you log into their website, they let you pick from roughly the last month's worth of versions of a given file. However, if you look at the URLs of the version previews they offer, there is something to notice; they look roughly like:

https://dl-web.dropbox.com/get/path/to/your/file.txt?w=abcdef&sjid=123456

The w=abcdef is the ID of your file, and the sjid=123456 is the version of that file. Even though the web interface only shows a limited number of versions, the version numbers appear to start counting at 1, so you can simply construct your own URLs to grab versions that they would not normally offer.

If you are especially careful and copy your browser cookies, you can even automate this process to grab all versions of a given file!
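Generating the candidate URLs is the easy part. This sketch only reproduces the 2011-era URL pattern shown above, with `w` as the file ID and `sjid` as the version number. That endpoint may well no longer exist, and the function name is hypothetical. Actually fetching the files would still require sending your own session cookies with each request.

```python
from urllib.parse import quote, urlencode


def version_urls(path, file_id, first_sjid, last_sjid):
    """Yield candidate preview URLs for every version number in a range.

    Follows the (assumed, 2011-era) pattern /get/<path>?w=<file id>&sjid=<version>.
    """
    base = "https://dl-web.dropbox.com/get/" + quote(path.lstrip("/"))
    for sjid in range(first_sjid, last_sjid + 1):
        yield base + "?" + urlencode({"w": file_id, "sjid": sjid})
```

For example, `version_urls("path/to/your/file.txt", "abcdef", 1, 123456)` walks from the first version up to the one in the example URL.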

View posts before July 21, 2011